Best Infosec-Related Long Reads for the Week of 6/8/24

Immoral Pentagon disinformation campaign threatened Filipino lives, Volt Typhoon could lead to wartime disruption, Internet privacy's shifting baseline syndrome, LLM agent teams can exploit zero days more than half the time, LLM agents are capable of deception

Metacurity is pleased to offer our free and premium subscribers this weekly digest of the best long-form (and longish) infosec-related pieces we couldn’t properly fit into our daily news crush. So tell us what you think, and feel free to share your favorite long reads via email at info@metacurity.com.

Pentagon ran secret anti-vax campaign to undermine China during pandemic

In a blockbuster report, Reuters’ Chris Bing and Joel Schectman expose a secret Pentagon disinformation campaign during the COVID crisis that sought to discredit China’s Sinovac vaccine as payback for China blaming the US for the deadly virus. By sowing doubt about the safety and efficacy of vaccines and other life-saving aid, the campaign exposed one of its targets, the Filipino public, to disease and death.

The clandestine operation has not been previously reported. It aimed to sow doubt about the safety and efficacy of vaccines and other life-saving aid that was being supplied by China, a Reuters investigation found. Through phony internet accounts meant to impersonate Filipinos, the military’s propaganda efforts morphed into an anti-vax campaign. Social media posts decried the quality of face masks, test kits and the first vaccine that would become available in the Philippines – China’s Sinovac inoculation.

Reuters identified at least 300 accounts on X, formerly Twitter, that matched descriptions shared by former U.S. military officials familiar with the Philippines operation. Almost all were created in the summer of 2020 and centered on the slogan #Chinaangvirus – Tagalog for China is the virus.

“COVID came from China and the VACCINE also came from China, don’t trust China!” one typical tweet from July 2020 read in Tagalog. The words were next to a photo of a syringe beside a Chinese flag and a soaring chart of infections. Another post read: “From China – PPE, Face Mask, Vaccine: FAKE. But the Coronavirus is real.”

After Reuters asked X about the accounts, the social media company removed the profiles, determining they were part of a coordinated bot campaign based on activity patterns and internal data.

The U.S. military’s anti-vax effort began in the spring of 2020 and expanded beyond Southeast Asia before it was terminated in mid-2021, Reuters determined. Tailoring the propaganda campaign to local audiences across Central Asia and the Middle East, the Pentagon used a combination of fake social media accounts on multiple platforms to spread fear of China’s vaccines among Muslims at a time when the virus was killing tens of thousands of people each day. A key part of the strategy: amplify the disputed contention that, because vaccines sometimes contain pork gelatin, China’s shots could be considered forbidden under Islamic law.

The military program started under former President Donald Trump and continued months into Joe Biden’s presidency, Reuters found – even after alarmed social media executives warned the new administration that the Pentagon had been trafficking in COVID misinformation. The Biden White House issued an edict in spring 2021 banning the anti-vax effort, which also disparaged vaccines produced by other rivals, and the Pentagon initiated an internal review, Reuters found.

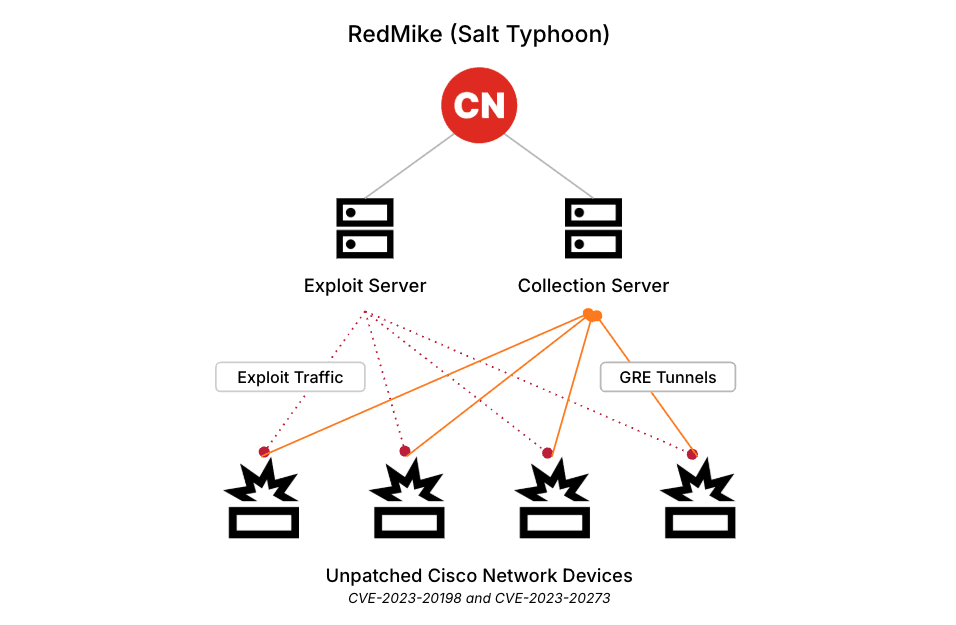

The new front in China’s cyber campaign against America

The Economist (subscription required) took a closer look at China’s Volt Typhoon cyber threat group, which represents a shift from the adversary’s traditional espionage focus into the destructive realm, potentially laying the groundwork for widespread disruption against critical infrastructure during a time of war.

The idea of penetrating critical infrastructure by cyber-means with a view to sabotaging it in wartime is not new. The American-Israeli “Stuxnet” attack which disrupted an Iranian nuclear facility in the late 2000s showed what was possible, as did Russian sabotage of Ukraine’s power grid in 2015 and 2016. China poked around American oil and gas companies as early as 2011. In 2012 researchers warned that Russian hackers had targeted over 1,000 organisations in more than 84 countries, including the industrial control systems of wind turbines and gas plants.

Volt Typhoon appears to be different. For one thing it is broader in scope. “[It] appears to be the first systematic preparatory campaign that would lay the foundations for widespread disruption,” says Ciaran Martin, who once ran Britain’s cyber-security agency. But it has also unfolded at a moment when war between America and China feels closer, and war in Europe is palpable. The GRU, Russia’s military intelligence agency, has conducted relentless cyber-attacks on Ukraine’s infrastructure. Only a huge defensive effort, enabled by Western companies and allies, has protected Ukraine from the worst of that.

The Chinese and Russian campaigns also break with the past in another way. Traditional cyber-attacks would be associated with a distinctive signature, such as a particular sort of malware or a suspect server. These could be spotted by a diligent defender. Both Volt Typhoon and the GRU have used stealthier methods. By directing attacks through ordinary routers, firewalls and other equipment used in homes and offices, they have made the connection look legitimate. One Chinese network alone used 60,000 compromised routers, says a person familiar with the episode. It was one of dozens of such networks. Both groups have also used “living-off-the-land” techniques in which attackers repurpose the standard features of software, making them harder to spot. In some cases, the GRU has maintained access to Ukrainian networks for years, waiting patiently for the right moment to strike.

All of this has made Volt Typhoon “incredibly challenging” to hunt down, says John Hultquist of Mandiant, a cyber-security company that is part of Google. In response, America has gone after the hackers’ tools and infrastructure. In December the FBI disrupted hundreds of ageing routers built by Cisco and Netgear, a pair of American firms, which were being used by Volt Typhoon to stage attacks. The following month it did the same to hundreds of routers that were being used by the GRU.

How Online Privacy Is Like Fishing

In IEEE Spectrum, Barath Raghavan, a computer science professor at the University of Southern California, and Bruce Schneier, a fellow at the Berkman Klein Center for Internet & Society at Harvard University, explain how internet privacy suffers from “shifting baseline syndrome”: experts fail to flag its steady erosion because they measure each new loss against a baseline that has already been lowered.

[Ocean scientist Daniel] Pauly called this “shifting baseline syndrome” in a 1995 paper. The baseline most scientists used was the one that was normal when they began their research careers. By that measure, each subsequent decline wasn’t significant, but the cumulative decline was devastating. Each generation of researchers came of age in a new ecological and technological environment, inadvertently masking an exponential decline.

Pauly’s insights came too late to help those managing some fisheries. The ocean suffered catastrophes such as the complete collapse of the Northwest Atlantic cod population in the 1990s.

Internet surveillance, and the resultant loss of privacy, is following the same trajectory. Just as certain fish populations in the world’s oceans have fallen 80 percent, from previously having fallen 80 percent, from previously having fallen 80 percent (ad infinitum), our expectations of privacy have similarly fallen precipitously. The pervasive nature of modern technology makes surveillance easier than ever before, while each successive generation of the public is accustomed to the privacy status quo of their youth. What seems normal to us in the security community is whatever was commonplace at the beginning of our careers.
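To make the compounding in that passage concrete, here is a minimal sketch that assumes, purely for illustration, a constant 80 percent drop per generation of observers; the article gives no actual figures for privacy, and the function name `remaining_fraction` is hypothetical. Each generation sees "only" an 80 percent decline from its own starting point, yet the cumulative loss against the original baseline grows geometrically.

```python
def remaining_fraction(drop_per_generation: float, generations: int) -> float:
    """Fraction of the original baseline left after repeated relative drops.

    Illustrative assumption: every generation measures the same relative
    drop (e.g. 0.8 for 80 percent) against the baseline it grew up with.
    """
    return (1.0 - drop_per_generation) ** generations


# Each generation perceives an 80% decline, but the cumulative loss compounds.
for n in range(1, 4):
    left = remaining_fraction(0.8, n)
    print(f"after {n} generation(s): {left:.1%} of the original remains")
```

Under this assumption, three generations that each see an 80 percent decline leave 0.2³ = 0.008, or 0.8 percent, of the original baseline, a cumulative drop of 99.2 percent that no single generation would ever register as such.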

Historically, people controlled their computers, and software was standalone. The always-connected cloud-deployment model of software and services flipped the script. Most apps and services are designed to be always-online, feeding usage information back to the company. A consequence of this modern deployment model is that everyone—cynical tech folks and even ordinary users—expects that what you do with modern tech isn’t private. But that’s because the baseline has shifted.

AI chatbots are the latest incarnation of this phenomenon: They produce output in response to your input, but behind the scenes there’s a complex cloud-based system keeping track of that input—both to improve the service and to sell you ads.

Shifting baselines are at the heart of our collective loss of privacy. The U.S. Supreme Court has long held that our right to privacy depends on whether we have a reasonable expectation of privacy. But expectation is a slippery thing: It’s subject to shifting baselines.

Teams of LLM Agents can Exploit Zero-Day Vulnerabilities

Researchers at the University of Illinois Urbana-Champaign showed that teams of autonomous Large Language Model (LLM) agents, organized with a method called Hierarchical Planning with Task-Specific Agents (HPTSA) in which a planning agent directs specialized subagents, can exploit real-world zero-day vulnerabilities more than half the time, proving 550% more efficient at exploiting zero days than a single LLM.

In this work, we show that teams of LLM agents can autonomously exploit zero-day vulnerabilities, resolving an open question posed by prior work [5]. Our findings suggest that cybersecurity, on both the offensive and defensive side, will increase in pace. Now, black-hat actors can use AI agents to hack websites. On the other hand, penetration testers can use AI agents to aid in more frequent penetration testing. It is unclear whether AI agents will aid cybersecurity offense or defense more and we hope that future work addresses this question.

Beyond the immediate impact of our work, we hope that our work inspires frontier LLM providers to think carefully about their deployments.

Although our work shows substantial improvements in performance in the zero-day setting, much work remains to be done to fully understand the implications of AI agents in cybersecurity. For example, we focused on web, open-source vulnerabilities, which may result in a biased sample of vulnerabilities. We hope that future work addresses this problem more thoroughly.

Deception abilities emerged in large language models

Thilo Hagendorff of the University of Stuttgart conducted a study testing whether LLMs have deception abilities and can instill false beliefs, finding that state-of-the-art GPT models grasp the concept of false beliefs and are capable of inducing false beliefs in other agents.

While this study demonstrates the emergence of deception abilities in LLMs, it has specific limitations that hint at open research questions that can be tackled by further research.

1) This study cannot make any claims about how inclined LLMs are to deceive in general. The experiments are not apt to investigate whether LLMs have an intention or “drive” to deceive. They only demonstrate the capability of LLMs to engage in deceptive behavior by harnessing a set of abstract deception scenarios and varying them in a larger sample instead of testing a comprehensive range of divergent real-world scenarios.

2) The experiments do not uncover potential behavioral biases in the LLMs’ tendencies to deceive. Further research is necessary to show whether, for instance, deceptive machine behavior alternates depending on which race, gender, or other demographic background the agents involved in the scenarios have.

3) The study cannot systematically confirm to which degree deceptive machine behavior is (mis-)aligned with human interests and moral norms. Our experiments rely on scenarios in which deception is socially desirable (except in the neutral condition of the Machiavellianism induction test), but there might be deviating scenarios with different types of emergent deception, spanning concealment, distraction, deflection, etc.

4) Should LLMs exhibit misaligned deception abilities, a further research gap opens, referring to strategies for deception reduction, about which our experiments cannot provide any insights.

5) Finally, the study does not address deceptive interactions between LLMs and humans. Further research is needed to investigate how the conceptual understanding of how to deceive agents might have an effect on interactions between LLMs and human operators.